Small vs. Large Language Models: Which is Right for Your Business in 2025?

The artificial intelligence landscape has undergone a dramatic transformation in recent months. As enterprises rush to implement AI solutions, a critical question has emerged: should businesses invest in large language models (LLMs) or explore the emerging world of small language models (SLMs)?

This choice affects operational costs, data privacy, deployment speed, and more. As enterprise language models become integral to competitive strategy, understanding the trade-offs between SLMs and LLMs is essential for making informed decisions that align with your business goals. With the enterprise AI market expected to reach $97.20 billion in 2025 and to grow at a CAGR of 18.90% to $229.30 billion by 2030, making the right choice has never been more important.

Whether you're a startup looking for on-premise AI models or an established enterprise evaluating enterprise language models, this article will help you navigate SLMs vs LLMs. We'll draw on credible sources, practical examples, and case studies to provide actionable insights. By the end, you'll have a clear framework for AI model selection that could optimize your operations and boost conversions through smarter AI integration.

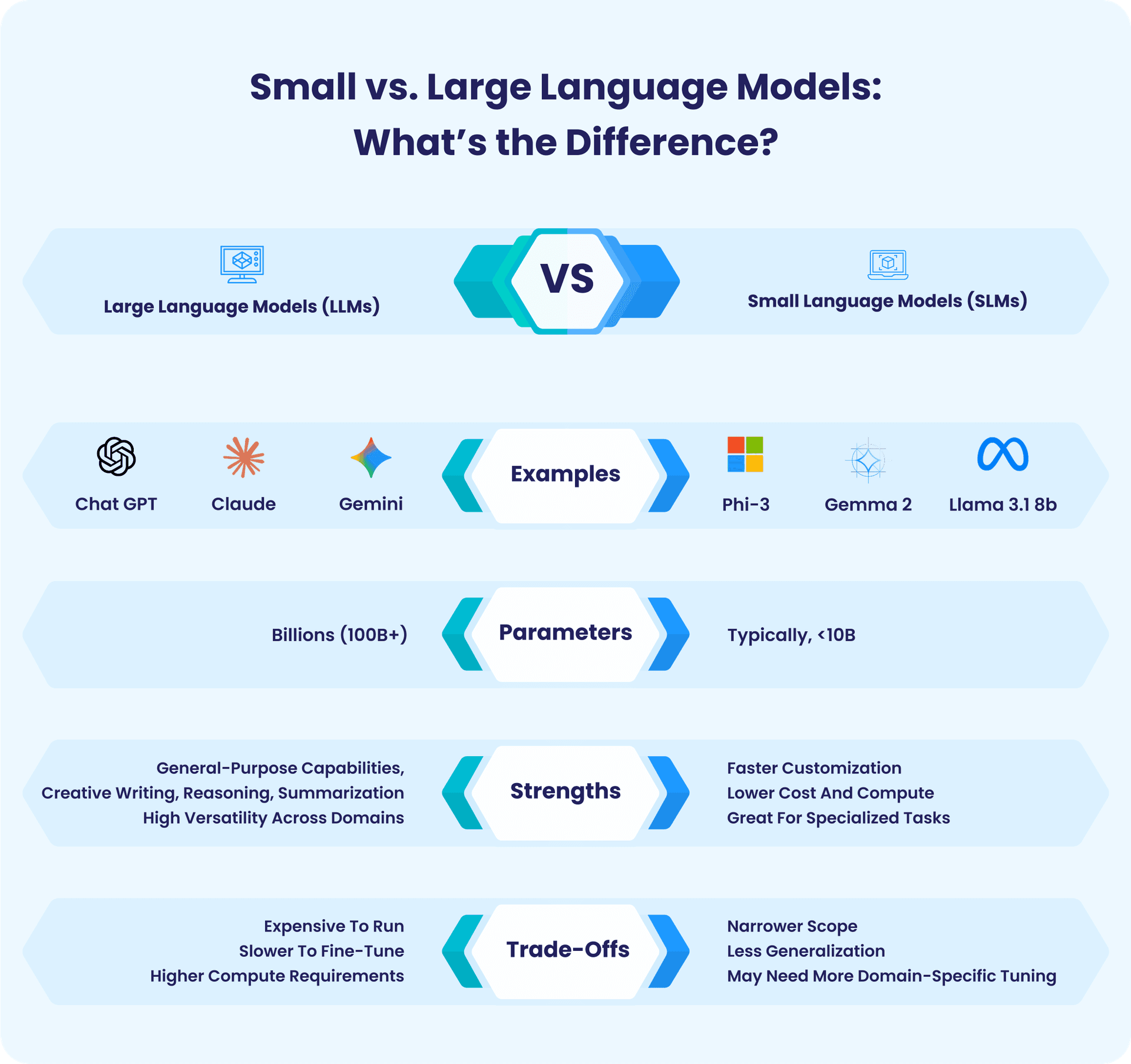

What is the Difference between Small Language Models and Large Language Models?

Before diving into AI model selection, let's clarify what distinguishes these two approaches.

- Large Language Models (LLMs) are the powerhouses you've likely heard about: models like GPT-4, Claude, and Gemini that contain billions of parameters and can perform a vast array of general-purpose tasks.

They're versatile, incredibly capable, and can handle everything from creative writing to complex reasoning.

- Small Language Models (SLMs), on the other hand, are compact models that are often specialized for particular domains, faster to customize, and more efficient to run. Models like Phi-3, Gemma 2, and Llama 3.1 8B typically contain fewer than 10 billion parameters but deliver impressive performance for specific use cases.

Think of them as focused specialists rather than generalists.

The distinction matters because the two make different trade-offs: LLMs are versatile, general-purpose models that require significant computing resources, while SLMs are efficient models optimized for precision on narrower domains and can be trained or fine-tuned on smaller datasets.
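Parameter count matters in practice largely because it drives memory. At 16-bit precision each parameter occupies two bytes, so an 8-billion-parameter model needs roughly 16 GB just to hold its weights, while a 70-billion-parameter model needs roughly 140 GB. The short Python sketch below makes that arithmetic explicit. The model sizes are illustrative, and it counts weights only, ignoring activations, the KV cache, and runtime overhead, so real requirements are higher.

```python
# Rough weight-memory estimate for a model at a given numeric precision.
# Assumption: weights only; activations, KV cache, and runtime overhead are ignored.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 uses the same two bytes per parameter
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Illustrative model sizes: 3.8B, 8B, and 70B parameters.
    for params in (3.8e9, 8e9, 70e9):
        for precision in ("fp16", "int4"):
            gb = weight_memory_gb(params, precision)
            print(f"{params / 1e9:5.1f}B params @ {precision}: ~{gb:.1f} GB")
```

Lower-precision formats shrink the footprint proportionally: at 4-bit precision the same 8B model needs about 4 GB for its weights, which is a large part of why small models can fit on a single workstation GPU or even a laptop.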

What Are the Real Costs of Implementing Enterprise Language Models?

One of the most overlooked aspects of AI model selection involves the true cost of deployment. Many businesses focus on the sticker price of API calls or model licensing, but the reality is far more complex.

For every dollar spent on AI models, businesses are spending five to ten dollars on hidden infrastructure, including data engineering teams, security compliance, constant model monitoring, and integration architects necessary to connect AI with existing systems.

This reality has forced many organizations to reconsider their approach to language model deployment.

While LLMs offer impressive capabilities, they come with substantial overhead:

- Infrastructure costs: LLMs require significant computational resources, often necessitating expensive GPU clusters or high-tier cloud computing subscriptions.

- API expenses: Token-based pricing can quickly escalate with high-volume applications.

- Latency issues: Round-trip times to cloud-based LLMs can impact user experience.

- Data transfer costs: Sending large volumes of data to external APIs adds up rapidly.

Consider a mid-sized enterprise processing customer service inquiries. With an LLM handling 10,000 conversations monthly, API costs alone could range from $500 to $2,000, before accounting for the infrastructure needed to prepare, send, and process the data.
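Token-based pricing makes this kind of estimate easy to reproduce for your own workload: multiply conversation volume by tokens per conversation and by the per-token price, separately for input and output tokens. The Python sketch below does exactly that. The token counts and the per-million-token prices are hypothetical placeholders, not any provider's actual rates.

```python
from dataclasses import dataclass


@dataclass
class TokenPricing:
    """Per-million-token prices in USD (hypothetical values; check your provider)."""
    input_per_million: float
    output_per_million: float


def monthly_api_cost(conversations: int,
                     input_tokens_per_conv: int,
                     output_tokens_per_conv: int,
                     pricing: TokenPricing) -> float:
    """Estimate monthly token spend for a chat workload, in USD."""
    input_cost = conversations * input_tokens_per_conv * pricing.input_per_million / 1e6
    output_cost = conversations * output_tokens_per_conv * pricing.output_per_million / 1e6
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical workload: 10,000 conversations a month, each sending ~8,000
    # input tokens (history plus context) and receiving ~1,500 output tokens.
    pricing = TokenPricing(input_per_million=5.0, output_per_million=15.0)
    cost = monthly_api_cost(10_000, 8_000, 1_500, pricing)
    print(f"Estimated monthly API cost: ${cost:,.2f}")
```

With these assumed inputs the estimate comes to $625 per month. Because the cost is linear in every input, doubling the conversation volume doubles the bill, and longer prompts (more history or retrieved documents) raise the input-token share proportionally.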

Why Cost-Effective AI Models Are Gaining Traction

The emergence of cost-effective AI models represents a fundamental shift in how businesses think about AI deployment.

Small language models are redefining enterprise AI by offering faster, more efficient, and more cost-effective solutions than LLMs. Their compact design allows deployment on edge devices, enabling real-time decision-making without dependence on the cloud.

This efficiency translates into tangible business benefits:

- Reduced operational expenses: SLMs can often run on standard hardware, reducing or eliminating the need for expensive GPUs.

- Lower latency: On-device or on-premise processing removes the network round trip, which can make responses noticeably faster.

- Predictable costs: No surprise API bills or usage-based pricing fluctuations.

- Resource optimization: Smaller models consume less memory and processing power.

Take the example of a retail chain implementing AI-powered inventory management. An SLM fine-tuned for product categorization and demand forecasting can run directly on existing servers, processing data in real time without external API calls. The cost savings over a cloud-based LLM solution could exceed 70% annually while delivering comparable accuracy for this specific task.

How Do On-Premise AI Models Protect Your Sensitive Data?

Data security and privacy have become paramount concerns, particularly in regulated industries like healthcare, finance, and government services. This is where on-premise AI models deliver significant advantages.

SLMs are well suited to on-premise deployment. Because they can run on an organization's own infrastructure, they reduce costs and are more secure and less vulnerable to data breaches, since data does not need to leave the organization's boundaries.

For enterprises handling sensitive information, this capability is often mandatory.

Consider these scenarios:

- Healthcare: A hospital implementing an AI assistant to help doctors access patient information can deploy on-premise AI models that process queries locally, supporting HIPAA compliance by not transmitting protected health information to external servers.

- Financial Services: Banks using AI for fraud detection need real-time analysis without exposing transaction data to third-party APIs. Small language models can run within the bank's secure infrastructure, analyzing patterns while maintaining complete data sovereignty.

- Legal Firms: Law firms handling confidential client matters can leverage on-premise AI models for document review and legal research without risking attorney-client privilege through external data transmission.
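In each of these scenarios, the core requirement is that sensitive prompts only ever reach a model running inside the organization's network. A minimal Python sketch of an application-level guard might check the endpoint before any data is sent. It assumes models are served either from a hypothetical allowlisted internal hostname (`llm.internal.example`) or from private IP addresses; both the allowlist and the function names are illustrative.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical allowlist of internal hostnames that serve on-premise models.
INTERNAL_HOSTS = {"llm.internal.example", "localhost"}


def is_on_premise_endpoint(url: str) -> bool:
    """True if the URL's host is allowlisted or is a private/loopback IP address."""
    host = urlparse(url).hostname  # lower-cased, without port
    if host is None:
        return False
    if host in INTERNAL_HOSTS:
        return True
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        return False  # any other hostname is treated as external
    return ip.is_private or ip.is_loopback


def require_on_premise(url: str) -> None:
    """Raise before any sensitive data is sent to an endpoint outside the network."""
    if not is_on_premise_endpoint(url):
        raise PermissionError(f"Blocked: {url} is not an approved on-premise endpoint")


if __name__ == "__main__":
    for url in ("http://10.0.0.5:8000/v1/chat", "https://api.example.com/v1/chat"):
        print(url, "->", "allowed" if is_on_premise_endpoint(url) else "blocked")
```

A check like this complements, rather than replaces, network-level controls such as egress firewall rules, since a hostname alone does not prove where traffic is actually routed.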

How Do You Choose Between SLMs and LLMs for Your Business?

Choosing between SLMs and LLMs isn't a binary decision. The most successful enterprise language model strategies often involve a hybrid approach.

Here's a framework for effective AI model selection:

1. Assess Your Use Case Complexity

Choose LLMs when:

- Tasks require broad general knowledge.

- Complex reasoning across multiple domains is necessary.

- Creative content generation spans diverse topics.

- The application needs to handle unpredictable queries.

- You're building customer-facing chatbots that must address any topic.

Choose SLMs when:

- Tasks are domain-specific (customer service scripts, technical documentation, specialized analysis).

- Response speed is critical.

- You need predictable, cost-effective AI models.

- Data privacy and security are paramount.

- The application has well-defined boundaries.
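One way to make this checklist actionable is to record which criteria a use case meets and compare the counts. The Python sketch below is a hypothetical scoring heuristic, not a standard method: the trait names are invented to mirror the bullets above, each criterion counts equally, and a use case with strong signals on both sides is flagged as a candidate for a hybrid approach.

```python
# Hypothetical trait names mirroring the "Choose LLMs when" / "Choose SLMs when" lists.
LLM_SIGNALS = {
    "broad_knowledge",
    "cross_domain_reasoning",
    "diverse_creative_content",
    "unpredictable_queries",
}
SLM_SIGNALS = {
    "domain_specific",
    "latency_critical",
    "predictable_cost",
    "privacy_paramount",
    "well_defined_scope",
}


def recommend(traits: set[str]) -> str:
    """Return "LLM", "SLM", or "hybrid" by counting which criteria a use case meets."""
    unknown = traits - LLM_SIGNALS - SLM_SIGNALS
    if unknown:
        raise ValueError(f"Unknown traits: {sorted(unknown)}")
    if not traits:
        raise ValueError("Describe the use case with at least one trait")
    llm_score = len(traits & LLM_SIGNALS)
    slm_score = len(traits & SLM_SIGNALS)
    # Strong signals on both sides suggest splitting the workload between models.
    if llm_score >= 2 and slm_score >= 2:
        return "hybrid"
    if slm_score > llm_score:
        return "SLM"
    if llm_score > slm_score:
        return "LLM"
    return "hybrid"  # a tie is also a cue to consider routing between both


if __name__ == "__main__":
    print(recommend({"domain_specific", "latency_critical", "well_defined_scope"}))
```

In practice you would likely weight the criteria rather than count them equally, for example by treating a hard data-residency requirement as decisive on its own.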

2. Evaluate Your Infrastructure

Your existing technical infrastructure significantly impacts language model deployment decisions.

Cloud-first organizations with robust API integration capabilities may find LLMs easier to implement initially.

However, data from mid-2025 indicates that a majority of OpenAI users, including over 92% of Fortune 500 firms, are deploying a range of models, both frontier and specialized, in production workloads, reflecting adoption that extends beyond frontier models alone.

On-premise environments naturally favor efficient AI models that can run on existing hardware.

Deploying language models on-premises reduces latency, preserves data sovereignty, and supports regulatory compliance by keeping sensitive data within the local environment.

3. Calculate Total Cost of Ownership

Don't just compare API pricing. Consider:

- Initial setup and integration costs.

- Ongoing operational expenses (compute, storage, bandwidth).

- Team resources required for maintenance and monitoring.

- Scaling costs as usage grows.

- Hidden costs like data preparation and model fine-tuning.

While total IT budgets are rising by around 2% in 2025, AI spending is growing by closer to 6%, making cost optimization increasingly important for sustainable AI adoption.
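The cost components listed above can be rolled into a simple multi-year comparison. The Python sketch below is illustrative only: every figure is a hypothetical placeholder, and it assumes that setup, data preparation, and fine-tuning are one-time costs while usage-driven compute grows at a fixed annual rate.

```python
from dataclasses import dataclass


@dataclass
class CostProfile:
    """Cost inputs in USD. All example values used below are hypothetical."""
    setup: float                  # one-time setup and integration
    data_and_fine_tuning: float   # one-time data preparation and fine-tuning
    monthly_compute: float        # API fees or self-hosted compute, storage, bandwidth
    monthly_team: float           # maintenance and monitoring effort
    annual_growth: float = 0.0    # yearly growth of compute cost as usage scales


def total_cost_of_ownership(p: CostProfile, years: int) -> float:
    """Sum one-time costs plus monthly costs over the horizon, growing compute yearly."""
    total = p.setup + p.data_and_fine_tuning
    compute = p.monthly_compute
    for _ in range(years):
        total += 12 * (compute + p.monthly_team)
        compute *= 1 + p.annual_growth
    return total


if __name__ == "__main__":
    # Hypothetical: a hosted LLM API versus a self-hosted, fine-tuned SLM.
    llm_api = CostProfile(setup=20_000, data_and_fine_tuning=5_000,
                          monthly_compute=1_500, monthly_team=2_000, annual_growth=0.30)
    slm_self_hosted = CostProfile(setup=40_000, data_and_fine_tuning=15_000,
                                  monthly_compute=600, monthly_team=2_500, annual_growth=0.05)
    for years in (1, 2, 3):
        print(f"Year {years}: LLM API ${total_cost_of_ownership(llm_api, years):,.0f}  "
              f"SLM ${total_cost_of_ownership(slm_self_hosted, years):,.0f}")
```

With these made-up inputs the self-hosted SLM costs more in the first year ($92,200 versus $67,000) but is slightly cheaper by the end of year three ($167,698 versus $168,820), which is why the evaluation horizon and expected usage growth matter as much as any single price.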

4. Consider Compliance Requirements

Regulated industries face unique challenges with AI model selection. Data residency requirements, audit trails, and privacy regulations may dictate your choice.

Key Compliance Factors to Consider:

- Data Residency: Where is your data stored and processed? Some jurisdictions require data to remain within national borders.

- Auditability: Can your AI model provide transparent logs and decision trails for audits and regulatory reviews?

- Privacy Regulations: Are you compliant with GDPR, HIPAA, or local data protection laws? This affects how personal data is handled by AI.

- Model Explainability: Can you explain how your model makes decisions? Black-box models may not meet regulatory standards.

- Vendor Risk: Are you using third-party models or APIs? Understand the risks of outsourcing sensitive data processing.

On-premise AI models often provide the clearest path to compliance, though some cloud providers now offer dedicated instances that address regulatory concerns.
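On the auditability point, one common building block is a structured log entry for every model call. The Python sketch below is an assumed design, not a regulatory requirement: it records a UTC timestamp, a model identifier, and the user, and stores SHA-256 digests of the prompt and response so the log can show what was processed without duplicating sensitive text. Some regimes may instead require retaining the full text, and the model and user identifiers in the example are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(model_id: str, user_id: str, prompt: str, response: str) -> str:
    """Build one JSON-lines audit entry with hashed prompt and response."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        "user_id": user_id,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
    }
    return json.dumps(entry, sort_keys=True)


if __name__ == "__main__":
    line = audit_record(
        "slm-policy-assistant-v3",  # hypothetical model identifier
        "emp-1042",                 # hypothetical user identifier
        "What is the travel reimbursement limit?",
        "Please see section 4.2 of the travel policy.",
    )
    # In production, append each line to write-once storage that auditors can query.
    print(line)
```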

What Are Real-World Examples of Successful SLM and LLM Implementations?

Understanding theory is one thing, but seeing practical implementations helps clarify when to use each approach.

SLM Success Stories: Where SLMs Deliver Big Impact

SLMs are gaining traction across industries for their efficiency, customizability, and cost-effectiveness, especially in use cases where speed, privacy, and domain specificity matter.

Here are some industry-proven SLM applications that demonstrate the potential of this approach:

1. Clinical Note Structuring in Healthcare

- Use Case: A hospital deploys SLMs to convert doctor voice notes into structured clinical documentation. The models are trained on specialty-specific terminology and run on-premise to meet privacy regulations.

- Why SLMs Work: Fast, domain-specific processing with full data control and low infrastructure overhead.

2. Internal Policy Assistant in Banking

- Use Case: A bank uses SLMs to power an internal chatbot that answers employee queries about HR and compliance policies. The models are trained on internal documents and run on standard servers.

- Why SLMs Work: High accuracy for repetitive, rule-based queries with minimal compute requirements.

3. Product Tagging in Retail

- Use Case: An ecommerce platform uses SLMs to automate product classification and tagging for new inventory. The models are fine-tuned on historical catalog data and deployed on existing infrastructure.

- Why SLMs Work: Efficient handling of structured, repetitive tasks with fast deployment and low cost.

4. Safety Reporting in Construction

- Use Case: A construction firm deploys SLMs on mobile devices to generate structured safety reports from voice inputs. The models work offline and are optimized for edge environments.

- Why SLMs Work: Real-time, low-latency processing in field conditions with no reliance on cloud connectivity.

5. Student Support Automation in Education

- Use Case: A university integrates SLMs into its portal to handle routine student queries about admissions, registration, and exams. The models are trained on institutional FAQs and run on campus servers.

- Why SLMs Work: High-volume query handling with fast response times and reduced support team load.

These examples show how SLMs can deliver high-impact results when deployed strategically in domain-specific, high-volume, or latency-sensitive environments.

xLoop's experience developing xVision, a computer vision-based solution initially built for a banking client, demonstrates the power of specialized AI. The system monitors security guard attire, suspicious activity, and cleanliness in real time, achieving a 40% reduction in security incidents. This targeted approach delivers better results than a general-purpose solution could provide.

When Do Large Language Models Make More Sense?

While SLMs offer efficiency and specialization, LLMs shine in scenarios that demand scale, versatility, and deep reasoning.

Here are key situations where LLMs are the better choice:

1. Complex Research Assistance

Use Case:

A pharmaceutical company uses LLMs to help researchers analyze scientific literature, generate hypotheses, and draft research proposals. The broad knowledge base and reasoning capabilities justify the higher costs for this high-value application.

Why LLMs Work:

- Ability to synthesize complex information.

- Supports reasoning across multiple disciplines.

- Access to broad domain knowledge.

2. Multilingual Content Creation

Use Case:

A global marketing agency leverages LLMs to create campaign content across 40+ languages and cultural contexts.

Why LLMs Work:

- Built-in multilingual capabilities.

- Cultural nuance and tone adaptation.

- Creative ideation across diverse markets.

3. Enterprise Knowledge Management

Use Case:

A consulting firm uses LLMs to build internal copilots that answer employee queries based on thousands of documents, policies, and reports.

Why LLMs Work:

- Can reason over large document collections, typically when paired with a retrieval step that supplies the most relevant passages.

- Handles ambiguity and context switching.

- Supports semantic search and summarization.

4. Advanced Reasoning & Decision Support

Use Case:

A financial institution uses LLMs to simulate market scenarios, assess risk, and generate investment strategies.

Why LLMs Work:

- Capable of multi-step reasoning.

- Can combine structured and unstructured data.

- Supports scenario generation and forecasting.

5. General Purpose AI Assistants

Use Case:

Enterprises deploy LLMs as internal copilots for HR, legal, IT, and operations.

Why LLMs Work:

- Versatile across departments.

- Handles diverse queries and tasks.

- Can be improved over time through user feedback, prompt refinement, and fine-tuning.

If your use case involves multiple domains, complex reasoning, multilingual output, or large-scale knowledge synthesis, LLMs are often the better choice despite their higher cost and compute requirements.
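For knowledge-management copilots like the consulting-firm example, the model usually does not read thousands of documents at once; a common pattern is to retrieve the few most relevant passages and place them in the prompt. The Python sketch below illustrates that flow using plain keyword-overlap scoring as a stand-in for the embedding-based semantic search a production system would typically use. The passages are hypothetical, and the final call to an LLM is left out.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case word tokens; a deliberately simple stand-in for real preprocessing."""
    return re.findall(r"[a-z0-9]+", text.lower())


def top_k_passages(query: str, passages: list[str], k: int = 3) -> list[str]:
    """Rank passages by how often query terms appear in them (keyword overlap,
    used here in place of embedding similarity)."""
    query_terms = set(tokenize(query))

    def score(passage: str) -> int:
        counts = Counter(tokenize(passage))
        return sum(counts[term] for term in query_terms)

    return sorted(passages, key=score, reverse=True)[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Assemble a grounded prompt from numbered context passages."""
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the numbered context below and cite passage numbers.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    docs = [
        "Expense policy: travel must be pre-approved by a manager.",
        "Remote work policy: employees may work remotely two days per week.",
        "The cafeteria opens at 8am on weekdays.",
    ]
    question = "What is the travel expense policy?"
    print(build_prompt(question, top_k_passages(question, docs, k=1)))
```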

The Hybrid Approach: Can You Use Both SLMs and LLMs Together?

The most sophisticated enterprise language model strategies don't force an either-or choice.

Instead, they combine both to optimize performance, cost, and scalability across use cases. Here’s how:

- Routing Architecture for Intelligent Query Handling: Implement a system that assesses incoming queries and routes simple, domain-specific requests to efficient AI models while directing complex or unusual queries to more capable LLMs. This optimizes both cost and performance.

- Specialization Strategy for Task Optimization: Use small language models for high-volume, repetitive tasks where they excel (data extraction, classification, standardized responses), while reserving LLMs for tasks requiring creativity or broad knowledge.

- Staged Deployment for Agile Development: Start with LLMs for rapid prototyping and understanding use case requirements, then transition to fine-tuned SLMs for production deployment once patterns are established.

By integrating both SLMs and LLMs into your architecture, you can reduce operational costs, improve response times, maintain high-quality outputs, and scale AI across departments and use cases.
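The routing architecture can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the SLM's domain is described by a hypothetical set of keyword-matched intents (a real system might use a small classifier or the SLM's own confidence score instead), the 40-word cutoff is arbitrary, and the two model callables are placeholders for an on-premise SLM and a hosted LLM.

```python
import re
from typing import Callable, Optional, Tuple

ModelFn = Callable[[str], str]  # placeholder type: send a prompt, get text back

# Hypothetical intents the SLM was fine-tuned to handle, matched by keyword.
SLM_INTENTS = {
    "order_status": re.compile(r"\b(order|shipment|tracking)\b", re.IGNORECASE),
    "password_reset": re.compile(r"\b(password|reset|locked out)\b", re.IGNORECASE),
    "store_hours": re.compile(r"\b(hours|opening time|closing time)\b", re.IGNORECASE),
}


def detect_intent(query: str) -> Optional[str]:
    """Return the first matching in-domain intent, or None if the query looks out of scope."""
    for intent, pattern in SLM_INTENTS.items():
        if pattern.search(query):
            return intent
    return None


def route(query: str, slm: ModelFn, llm: ModelFn, max_slm_words: int = 40) -> Tuple[str, str]:
    """Send short, in-domain queries to the SLM and everything else to the LLM."""
    if detect_intent(query) is not None and len(query.split()) <= max_slm_words:
        return "slm", slm(query)
    return "llm", llm(query)


def fake_slm(q: str) -> str:
    return f"[SLM] handled: {q}"


def fake_llm(q: str) -> str:
    return f"[LLM] handled: {q}"


if __name__ == "__main__":
    for q in ("Where is my order?", "Draft a market-entry strategy for Southeast Asia."):
        print(route(q, fake_slm, fake_llm))
```

Returning the route label alongside the answer makes it easy to log how much traffic the cheaper model absorbs, which is the number that determines the cost benefit of the hybrid design.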

What Are the Latest Trends in Language Model Deployment?

Several trends in the AI landscape are reshaping how businesses approach AI model selection:

- Model Distillation and Compression: Model distillation trains smaller, more efficient 'student' models to replicate the behavior of larger 'teacher' models, while quantization uses lower-precision numerical representations to reduce memory and computational demands. These techniques let businesses capture much of an LLM's performance in smaller, more efficient models.

- Edge AI Acceleration: Small language models deployed on edge devices remove the dependency on the cloud, reducing latency, bandwidth use, and privacy risk. Quantization, pruning, and other model optimizations, combined with efficient inference runtimes, make this feasible on constrained hardware. This trend particularly benefits IoT applications, mobile deployments, and latency-sensitive use cases.

- Domain-Specific Pre-training: Rather than only fine-tuning general models, organizations are increasingly investing in small language models pre-trained on domain-specific data. A legal tech company, for example, might train an SLM exclusively on legal documents, potentially creating a specialist that outperforms a general LLM on legal tasks.

- Agentic AI Systems: Recent research argues that small language models are sufficiently powerful, inherently more suitable, and necessarily more economical for many invocations in agentic systems, and therefore that they are the future of agentic AI. This has significant implications for enterprises building autonomous systems that make decisions and take actions.

xLoop's xServe demonstrates this principle in action. Initially built as a proof-of-concept for a leading UAE-based food chain, this autonomous order management system allows users to simply speak to the platform and place orders while receiving updates on deals, promotions, calorie counts, and other relevant information, all powered by efficient language model deployment.
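The distillation and quantization techniques behind the first trend can be illustrated compactly. The Python sketch below shows the commonly used soft-target distillation loss (KL divergence between temperature-softened teacher and student distributions, scaled by the squared temperature) and symmetric per-tensor int8 quantization. It is a simplified sketch: real training pipelines usually combine the distillation term with a standard loss on ground-truth labels and operate on tensors rather than Python lists, and the logits and weights in the example are made up.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """Soft-target loss: T^2 * KL(teacher || student) on temperature-softened outputs."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate floating-point weights."""
    return [scale * v for v in q]


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]
    student = [1.5, 1.2, 0.3]
    print(f"distillation loss: {distillation_loss(student, teacher):.4f}")

    weights = [0.42, -1.27, 0.03, 0.88]
    codes, scale = quantize_int8(weights)
    print(codes, [round(w, 3) for w in dequantize(codes, scale)])
```

Storing one byte per weight instead of four (fp32) or two (fp16) is where the memory saving comes from; the price is a rounding error of at most half the scale per weight.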

What Are the Key Takeaways for Business Leaders?

The conversation around SLMs vs LLMs reflects a broader maturation of enterprise AI. Early adopters often chose the most powerful available models, but seasoned practitioners now understand that effective AI model selection requires matching capabilities to specific needs.

SLMs, being smaller and more focused, might be easier to audit and secure, providing greater control over data privacy and data security, while requiring less effort and resources to retrain and update due to their size.

This flexibility enables more organizations to adopt AI confidently, knowing they can start with cost-effective AI models and scale strategically as needs evolve.

As you consider your AI strategy, remember these essential points:

- There's no universal answer: The right choice depends on your specific use cases, infrastructure, budget, and compliance requirements.

- Total cost matters more than sticker price: Factor in infrastructure, integration, maintenance, and scaling costs when evaluating options.

- Security and privacy aren't optional: For sensitive data, on-premise AI models often provide the only viable path forward.

- Hybrid approaches deliver optimal results: Strategically combining small language models and LLMs lets you optimize for both cost and capability.

- Specialization drives efficiency: Domain-specific efficient AI models often outperform general-purpose solutions for focused tasks.

- The landscape keeps evolving: Stay informed about new models, deployment options, and optimization techniques.

- Start focused, then expand: Begin with well-defined use cases that deliver clear ROI before expanding to more ambitious applications.

Choosing the Right AI Strategy for Your Business

Whether you're just beginning your AI journey or looking to optimize existing implementations, the choice between small language models and large language models represents a critical strategic decision.

The right approach balances capability, cost, security, and scalability to deliver genuine business value.

At xLoop, we've helped organizations across banking, healthcare, logistics, and retail navigate these decisions. From deploying on-premise AI models for sensitive financial data to building hybrid architectures that optimize for both performance and cost, we understand that successful language model deployment requires more than technical expertise; it demands strategic thinking aligned with your business objectives.

About the Author

Abdul Wasey Siddique

Software engineer by day, AI enthusiast by night, Wasey explores the intersection of code and its impact on humanity.
