Building Scalable AI Infrastructure: Lessons from Real-World Implementations

While companies spent over $50 billion on AI infrastructure in 2025, many discovered that simply accumulating GPUs doesn't guarantee scalability. The real challenge isn't about having the most powerful hardware; it's about building systems that can efficiently handle inference workloads at scale while managing power, latency, and operational complexity.

The Post-GPU Bottleneck Strategy

In 2026, scalable AI infrastructure has shifted from GPU hoarding to inference-native architectures.

Key lessons from real-world deployments include prioritizing liquid cooling (expected to drive 55% of hyperscale AI datacenter deployments), adopting distributed inference to reduce latency by 2.6–4.2x, and using model distillation to shrink infrastructure footprints amid rising power demands.

This represents a fundamental rethinking of how we approach AI infrastructure. The bottleneck has moved from compute availability to three critical areas: power delivery, network latency, and orchestration, with 55% of organizations citing infrastructure and technical bottlenecks as a constraint on scaling AI.

Companies that recognize this shift are building infrastructure that scales efficiently, while those still focused on GPU accumulation face mounting challenges with energy costs and operational complexity.

Lesson 1: The Fallacy of "Infinite Compute"

One of the most persistent myths in AI infrastructure is that you can always scale up by adding more powerful GPUs. The reality is far more complex. Vertical scaling—simply deploying larger, more powerful GPU clusters—hits hard walls at the enterprise level, and the primary constraint is not hardware availability or even cost. It is power.

AI clusters are facing serious grid interconnection delays and demand charges that fundamentally limit throughput. Datacenters cannot simply plug in another rack of H100s and call it a day. Electrical infrastructure, cooling systems, and grid connections become the real bottlenecks. In many cases, companies are discovering that their facilities literally cannot deliver enough power to run the hardware they have already purchased.

The solution lies in horizontal orchestration rather than vertical scaling. Kubernetes-based AI clusters have emerged as the standard approach, using tools like Horizontal Pod Autoscaler (HPA) and Vertical Pod Autoscaler (VPA) to dynamically optimize resources for fluctuating workloads. This approach treats compute as a distributed resource that can be intelligently allocated rather than a monolithic asset that must be maximally utilized at all times.
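To make the HPA idea concrete, the sketch below approximates the core rule the Kubernetes documentation gives for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance (0.1 by default) and clamped to the configured minimum and maximum. It is a simplified model, not the controller itself: it ignores stabilization windows, pod readiness, and multi-metric handling. Using GPU utilization as the metric is an assumption; in practice that requires exposing it through a custom or external metrics adapter.

```python
import math

def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Approximate the Kubernetes HPA scaling rule for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Inside the tolerance band the HPA leaves the replica count unchanged.
        desired = current_replicas
    else:
        # Scale proportionally to how far the metric is from its target, rounding up.
        desired = math.ceil(current_replicas * ratio)
    # Never go outside the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))

# 4 inference pods at 90% average GPU utilization against a 60% target -> 6 pods.
print(desired_replicas(4, current_metric=90, target_metric=60))
```

The proportional rule is what lets capacity follow demand in both directions: the same function that adds pods at peak removes them when utilization falls below target.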

Real-world implementations show that horizontal orchestration can significantly reduce infrastructure costs while maintaining performance. Instead of provisioning massive GPU clusters for peak load, companies can distribute workloads across smaller, more manageable units that scale with actual demand. This is not only more cost-effective; it is often the only viable path forward when power constraints make vertical scaling impossible.
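The cost argument is simple arithmetic: static provisioning pays for the peak hour around the clock, while demand-based scaling pays only for what each hour needs. The sketch below compares GPU-hours for both strategies using a made-up 24-hour demand profile and an assumed 20% headroom; the profile, the headroom, and the function names are all hypothetical and illustrate the calculation, not a measured result.

```python
def provision(demand: int, headroom_pct: int) -> int:
    """GPUs to provision for `demand` plus a percentage headroom, rounded up (integer math)."""
    return -(-(demand * (100 + headroom_pct)) // 100)

def gpu_hours_static(hourly_demand: list[int], headroom_pct: int = 20) -> int:
    """Every hour is provisioned for the peak hour."""
    return provision(max(hourly_demand), headroom_pct) * len(hourly_demand)

def gpu_hours_autoscaled(hourly_demand: list[int], headroom_pct: int = 20) -> int:
    """Each hour is provisioned for that hour's own demand."""
    return sum(provision(d, headroom_pct) for d in hourly_demand)

# Hypothetical 24-hour profile: GPUs needed per hour (illustrative, not measured data).
demand = [4, 4, 3, 3, 3, 4, 6, 10, 16, 20, 22, 24,
          24, 22, 20, 18, 16, 14, 12, 10, 8, 6, 5, 4]

static = gpu_hours_static(demand)
autoscaled = gpu_hours_autoscaled(demand)
print(static, autoscaled)
```

With this invented profile, static provisioning uses 696 GPU-hours against 342 for demand-based scaling. The gap depends entirely on how spiky real demand is, so a flat workload would see little benefit.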

The key insight is that energy scarcity is forcing the industry toward efficiency over expansion. Companies that embrace this reality and build infrastructure around intelligent resource allocation are achieving better results with less hardware than competitors still chasing the "infinite compute" dream.

Lesson 2: Moving from RAG to "Long-Context Native" Systems

Retrieval-Augmented Generation (RAG) has been the go-to architecture for building AI systems that can access external knowledge. But as deployments scale, a silent killer emerges: vector database degradation. At scales of 100,000 pages or more, RAG precision can degrade by up to 12% due to fundamental limitations in similarity search algorithms.

This is not a problem you can solve by buying better vector databases. It is an architectural limitation of the RAG approach itself. As your knowledge base grows, the semantic search that powers RAG becomes increasingly imprecise, leading to retrieved context that is less relevant and model outputs that suffer accordingly.
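For readers less familiar with the retrieval step, the sketch below shows what "semantic search" means mechanically: embed the query, score every stored chunk by cosine similarity, and keep the top k. It is an exact brute-force search over toy two-dimensional vectors; production vector databases typically use approximate nearest-neighbor indexes that trade some recall for speed. The function names and vectors are hypothetical, and the sketch illustrates the mechanism only. It does not reproduce the precision figures cited above.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query: list[float], index: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Exact (brute-force) top-k retrieval by cosine similarity."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]

# Toy embeddings standing in for document chunks.
index = {"a": [1.0, 0.0], "b": [0.9, 0.1], "c": [0.0, 1.0]}
print(top_k([1.0, 0.0], index, k=2))
```

Because only the k highest scores survive, every additional near-duplicate chunk in a growing corpus competes for the same few slots, which is one intuition for why retrieval quality can slip as the knowledge base grows.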

The alternative gaining traction is Context Caching, a technique that stores and reuses context across LLM interactions rather than retrieving it fresh for every query. This approach can cut API costs by 75–90% for repeated queries and, when the knowledge base fits within the model's context window, removes the vector store whose overhead causes RAG to degrade at scale.
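The savings come from billing: cached context tokens are charged at a discounted rate, while only the new query tokens pay full price. The sketch below computes per-query input cost with and without caching. The price of 1.0 per million tokens and the 75% cache discount are hypothetical placeholders, not any provider's published rates, and real pricing may add cache-storage or cache-write charges that this ignores.

```python
from typing import Optional

def query_cost(context_tokens: int, query_tokens: int,
               price_per_mtok: float, cached_discount: Optional[float] = None) -> float:
    """Input-token cost of one query.

    price_per_mtok: price per million input tokens (hypothetical figure).
    cached_discount: None bills the whole context at the normal rate;
    0.75 bills cached context tokens at 25% of the normal rate.
    """
    if cached_discount is None:
        context_rate = price_per_mtok
    else:
        context_rate = price_per_mtok * (1 - cached_discount)
    # The new query tokens are never cached, so they always pay the full rate.
    return (context_tokens * context_rate + query_tokens * price_per_mtok) / 1_000_000

uncached = query_cost(200_000, 1_000, price_per_mtok=1.0)
cached = query_cost(200_000, 1_000, price_per_mtok=1.0, cached_discount=0.75)
savings = 1 - cached / uncached
print(f"{uncached:.3f} -> {cached:.3f} ({savings:.1%} saved)")
```

With these made-up numbers the per-query input cost drops from 0.201 to 0.051, roughly 75%. As the cached context dominates the prompt, the saving approaches the cache discount itself.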

Context Caching works by storing a reusable prompt prefix, such as a large document set, on the provider side so that subsequent queries can reference it without reprocessing it from scratch or running a separate retrieval step. In deployments optimized for models like Gemini, companies are seeing 60% cost savings compared to traditional RAG implementations.
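On the application side, the pattern usually reduces to "look up a cache handle for this context; create one if it is missing or expired." The sketch below keys entries by a SHA-256 hash of the context and gives each a time-to-live. The `ContextCache` class, the `cache-…` handle format, and the one-hour default TTL are hypothetical; a real system would call its provider's caching API at the marked point instead of fabricating a handle.

```python
import hashlib
import time
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class CachedContext:
    handle: str        # stands in for a provider-side cache identifier (hypothetical)
    expires_at: float  # absolute expiry time in seconds

@dataclass
class ContextCache:
    ttl_seconds: float = 3600.0
    entries: dict[str, CachedContext] = field(default_factory=dict)
    hits: int = 0
    misses: int = 0

    def get_or_create(self, context: str, now: Optional[float] = None) -> str:
        """Return a cache handle for `context`, creating one if absent or expired."""
        now = time.time() if now is None else now
        key = hashlib.sha256(context.encode("utf-8")).hexdigest()
        entry = self.entries.get(key)
        if entry is not None and entry.expires_at > now:
            self.hits += 1
            return entry.handle
        self.misses += 1
        # Hypothetical: a real system would upload the context to the provider here.
        handle = f"cache-{key[:12]}"
        self.entries[key] = CachedContext(handle, now + self.ttl_seconds)
        return handle

cache = ContextCache(ttl_seconds=60)
first = cache.get_or_create("policy manual v3", now=0)
second = cache.get_or_create("policy manual v3", now=30)  # reused within TTL
```

Hashing the content rather than a filename means any edit to the underlying documents produces a new key, so stale context is never silently reused.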

This shift represents a fundamental rethinking of how we build knowledge-intensive AI systems. Instead of treating external knowledge as something to be retrieved on-demand, long-context native systems treat it as something to be efficiently cached and reused. For high-volume scenarios where the same information is accessed repeatedly, this architectural change is now a necessity for maintaining both performance and cost-effectiveness at scale.

The technical implications are significant. Moving away from vector databases means simpler infrastructure, fewer moving parts, and more predictable performance characteristics. It also means that as context windows continue to expand, the systems being built today will scale more naturally with future model capabilities.

Lesson 3: The "Sovereign Stack" vs. Public Cloud

For regulated industries like finance and healthcare, the public cloud presents an impossible tradeoff: scale versus compliance. But in 2026, this false choice is being replaced by hybrid-cloud “Sovereign AI Stacks” that enable organizations to retain sensitive data on-premise while still leveraging cloud compute for processing.

In healthcare, this architectural approach protects clinical trial data across borders while still allowing researchers to use powerful AI models for analysis. Financial institutions use on-premise infrastructure for transaction processing while moving less-sensitive query workloads to private cloud environments. The key is maintaining zero security compromises while achieving scalability that would be impossible with purely on-premise deployments.
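At its simplest, a sovereign stack needs a routing policy that decides where each workload may run. The sketch below assumes three hypothetical sensitivity tiers and a set of approved cloud regions: regulated data always stays on-premise, and everything else may go to the cloud only if its data region is approved. The tier names, region labels, and `route` function are illustrative assumptions, not a compliance framework.

```python
from dataclasses import dataclass
from enum import Enum

class Sensitivity(Enum):
    PUBLIC = 1
    INTERNAL = 2
    REGULATED = 3  # e.g. clinical trial records or transaction data

@dataclass(frozen=True)
class Workload:
    name: str
    sensitivity: Sensitivity
    data_region: str

def route(workload: Workload, allowed_cloud_regions: set[str]) -> str:
    """Return 'on_prem' or 'cloud' under a simple data-residency policy."""
    if workload.sensitivity is Sensitivity.REGULATED:
        return "on_prem"  # regulated data never leaves owned infrastructure
    if workload.data_region not in allowed_cloud_regions:
        return "on_prem"  # data from unapproved regions stays local
    return "cloud"

print(route(Workload("transactions", Sensitivity.REGULATED, "eu"), {"eu"}))
print(route(Workload("faq-queries", Sensitivity.PUBLIC, "eu"), {"eu"}))
```

Defaulting to on-premise whenever a rule is not satisfied keeps the policy fail-safe: a misclassified workload costs capacity, not compliance.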

The sovereign stack concept recognizes that data sovereignty is not merely a regulatory requirement but a strategic advantage. Organizations that can demonstrate complete control over their data infrastructure can compete for customers and contracts that would be impossible to win with purely cloud-based approaches.

Real-world implementations show that hybrid approaches can actually improve performance while meeting compliance requirements. By keeping sensitive data close to where it is generated and moving compute-intensive operations to locations where resources are most efficiently available, organizations achieve better latency profiles than either pure cloud or pure on-premise approaches could provide.

This architectural pattern is becoming the standard for regulated industries because it is the only approach that scales without introducing compliance risk. As AI systems become more deeply integrated into core business processes, the ability to maintain data sovereignty while leveraging advanced AI capabilities becomes essential.

Lesson 4: Operationalizing LLMOps (The "Day 2" Problem)

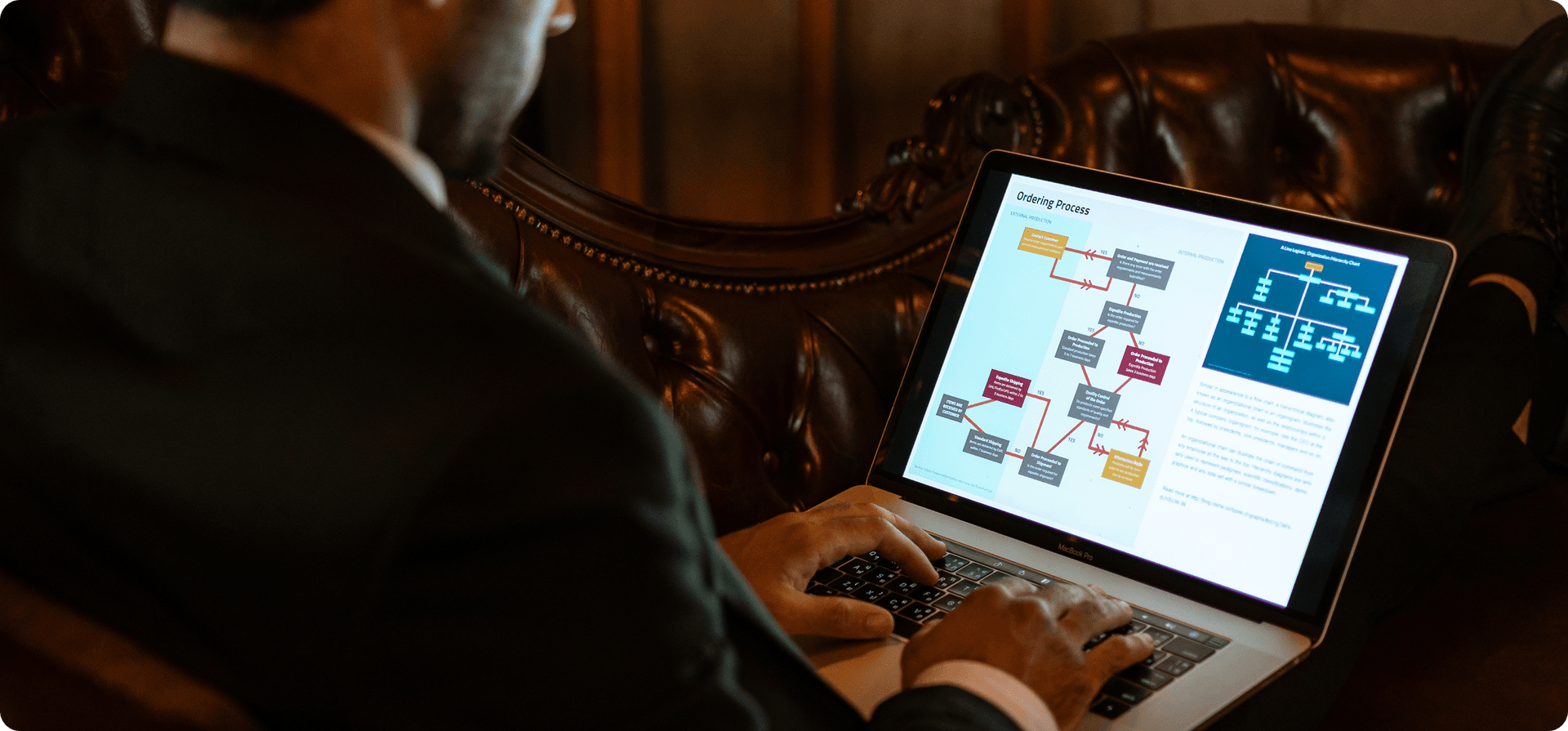

Getting an AI model into production is relatively straightforward. Keeping it running reliably over months and years—the "Day 2" problem—is where most implementations struggle. LLMOps addresses this challenge through three core capabilities: real-time drift detection, automated fine-tuning, and version control.

Model drift, meaning degrading performance as data distributions change, is one of the most common causes of production failures. Continuous monitoring can reduce drift-related degradation by 35–45% by catching issues early and triggering corrective actions before users notice problems. Systems with robust LLMOps practices report markedly higher availability than manually managed deployments.
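One common way to quantify distribution drift is the Population Stability Index (PSI), which compares how a feature or model score is spread across bins in a baseline window versus a recent window: PSI = Σ (actual_i − expected_i) × ln(actual_i / expected_i). The sketch below is a swapped-in illustration, not necessarily the method behind the figures above. The bin count, the epsilon floor for empty bins, and the widely used rule of thumb that PSI above 0.25 signals significant shift are all conventions you would tune for your own data.

```python
import math

def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` against the `expected` baseline."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Bin edges come from the baseline; out-of-range values go to the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))

baseline = [i / 100 for i in range(100)]        # scores at deployment time
recent = [0.5 + i / 200 for i in range(100)]    # scores after a hypothetical shift
print(psi(baseline, baseline), psi(baseline, recent))
```

Running this on a schedule and alerting when PSI crosses a threshold is one way to implement the "catch issues early" monitoring described above.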

Automated fine-tuning is particularly powerful, often yielding performance gains above 10% by continuously adapting models to new data patterns. This is not about major retraining efforts but about small, incremental adjustments that keep models aligned with current conditions.

Versioning through CI/CD pipelines, with models packaged as Docker images and deployed on Kubernetes, ensures that model updates can be rolled out safely and rolled back if issues emerge. While this resembles standard DevOps practice, applying it effectively to AI systems requires treating model weights and training data as carefully versioned artifacts, just like application code.
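Treating weights and data as versioned artifacts usually means pinning them by content hash, so a given model version always refers to exactly the same bytes. The sketch below builds a JSON manifest of SHA-256 digests that a CI pipeline could store alongside a container image tag. The manifest layout and the `build_manifest` name are hypothetical; tools such as MLflow or DVC offer their own artifact tracking, which this does not replicate.

```python
import hashlib
import json
from pathlib import Path

def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large weight files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_manifest(version: str, artifacts: dict[str, Path]) -> str:
    """Return a JSON manifest pinning each artifact (weights, data, config) by content hash."""
    entries = {
        name: {"path": str(path), "sha256": sha256_file(path)}
        for name, path in sorted(artifacts.items())
    }
    return json.dumps({"model_version": version, "artifacts": entries}, indent=2, sort_keys=True)
```

A rollback then amounts to redeploying an earlier manifest and verifying each hash before the model loads, which catches silently modified weights or datasets.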

Feedback loops that trigger retraining based on performance metrics prevent the gradual degradation that occurs when models are deployed and forgotten. The most successful implementations treat AI systems as living artifacts that require ongoing care rather than static assets that can be deployed once and left alone.
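A feedback loop needs a concrete trigger rule. The sketch below assumes a hypothetical policy: keep a rolling window of recent evaluation scores and signal retraining once the window is full and its average falls below a threshold. Averaging over a window avoids retraining on a single noisy evaluation. The class name, window size, and threshold are illustrative, and what happens after the trigger (fine-tuning job, approval gate, redeploy) is left to the pipeline.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class RetrainTrigger:
    threshold: float  # minimum acceptable average score, e.g. eval accuracy
    window: int = 5   # number of recent evaluations to average
    scores: deque = field(default_factory=deque)

    def observe(self, score: float) -> bool:
        """Record a new evaluation score; return True if retraining should start."""
        self.scores.append(score)
        if len(self.scores) > self.window:
            self.scores.popleft()  # keep only the most recent `window` scores
        full = len(self.scores) == self.window
        return full and sum(self.scores) / self.window < self.threshold

trigger = RetrainTrigger(threshold=0.8, window=3)
decisions = [trigger.observe(s) for s in [0.90, 0.85, 0.82, 0.70]]
print(decisions)
```

Here the last evaluation pulls the three-score average to about 0.79, below the 0.8 threshold, so only the final observation fires the trigger.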

Scalable vs. Non-Scalable Infrastructure

Understanding the difference between approaches that scale and those that don't requires looking at multiple dimensions:

| Aspect | Scalable (Inference-Native) | Non-Scalable (GPU-Centric) |
|---|---|---|
| Power Efficiency | Liquid cooling supporting 100kW+ racks | Grid bottlenecks with high demand charges |
| Latency Management | Distributed inference achieving 2.6–4.2x speed improvements | Vertical scaling causing delays |
| Cost Control | Model distillation providing 75% token savings | Operating under the "infinite compute" myth |
| Orchestration | Kubernetes HPA/VPA for dynamic scaling | Manual provisioning and resource allocation |

A clear pattern emerges: scalable infrastructure puts power, latency, and orchestration at the forefront instead of treating them as afterthoughts, while non-scalable approaches focus primarily on raw compute power and assume the other constraints will work themselves out.
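Model distillation, listed above as a cost lever, trains a smaller "student" model to reproduce a larger "teacher" model's output distribution so the cheaper model can serve most traffic. The sketch below implements the classic knowledge-distillation loss from Hinton et al. for a single example: a KL-divergence term on temperature-softened probabilities (scaled by T²) blended with ordinary cross-entropy on the true label. This is a standard formulation chosen for illustration, not necessarily what the deployments above used, and the temperature and weighting defaults are hypothetical; the token-savings figure comes from the article, not from this code.

```python
import math

def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      label: int, temperature: float = 2.0, alpha: float = 0.5) -> float:
    """Knowledge-distillation loss for one example (Hinton-style)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Ordinary cross-entropy against the hard label at temperature 1.
    ce = -math.log(softmax(student_logits)[label])
    # T^2 keeps the soft-target term's gradient scale comparable across temperatures.
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce
```

When the student already matches the teacher, the KL term vanishes and only the label loss remains; the further the student's distribution strays from the teacher's, the larger the penalty.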

Lesson 5: Infrastructure-as-Talent (The CoE Strategy)

Perhaps the most overlooked aspect of scaling AI infrastructure is the human element. Traditional IT workflows simply cannot support AI workloads effectively. Modern AI infrastructure is code-defined and requires specialized teams that understand both the machine learning and infrastructure sides equally well.

The solution is the AI Center of Excellence (CoE), a team structure that bridges data scientists and DevOps engineers. Success requires team members who understand model weights as well as server racks and who can optimize training pipelines while also managing Kubernetes clusters.

The goal is not to hire more people but to build teams with fundamentally different skill sets than traditional IT requires. The emerging "AI Engineer" role combines Python programming, neural network architecture knowledge, MLOps tools like MLflow, and Kubernetes expertise. These are not separate specialists collaborating; they are individuals who span the entire stack.

Real-world data shows high failure rates for legacy infrastructure retrofits, with 70–85% of GenAI and AI deployment efforts failing to deliver the expected ROI within short timelines. The primary cause is not technical but organizational: companies trying to scale AI with traditional IT team structures find that knowledge gaps and communication barriers make efficient operation impossible.

Successful CoE strategies embed MLOps practices directly into infrastructure teams, ensuring that operational concerns inform architectural decisions from the start. This creates systems that are designed for real-time adaptability rather than requiring manual intervention when conditions change.

Legacy IT Infrastructure vs. 2026 AI-First Infrastructure

The differences between traditional IT approaches and AI-first infrastructure extend across every dimension:

| Dimension | Legacy IT Infrastructure | 2026 AI-First Infrastructure |
|---|---|---|
| Architecture | Batch processing with manual MLOps | Inference-native with autoscaling |
| Team Skills | Server-focused administrators | AI engineers with MLflow and neural network expertise |
| Scalability | Data silos causing 60% project failures | Hybrid sovereign architectures with drift monitoring |
| Deployment | Static configurations requiring high rework | CI/CD with continuous retraining |

These are not minor differences; they represent fundamentally different approaches to infrastructure. AI-first designs treat model deployment, monitoring, and adaptation as core capabilities rather than optional add-ons.

Looking Forward

Building scalable AI infrastructure in 2026 requires rethinking fundamental assumptions about how systems should be designed and operated. The shift from GPU accumulation to inference-native architectures, from RAG to long-context systems, from public cloud to sovereign stacks, and from traditional IT to AI-first teams represents a clear maturation of the field.

Organizations that embrace these lessons are discovering that AI can scale reliably and cost-effectively: they prioritize efficiency over raw compute, design for Day 2 operations from the beginning, and build teams with the right mix of hybrid skills. Those that continue to approach AI infrastructure as traditional IT are finding their systems hitting limits that additional hardware alone cannot solve.

The future of AI infrastructure is not about having the most GPUs. It is about building systems that use compute intelligently, adapt automatically to changing conditions, and operate reliably at scale. That is both the central challenge and the real opportunity for organizations building AI systems today.

