Fortifying Against Data Exfiltration: DLP Strategies for Generative AI & LLMs

As enterprises integrate Generative AI (GenAI) and Large Language Models (LLMs) into core workflows, the risk of data exfiltration has evolved from a theoretical threat to an operational reality. Sensitive prompts, proprietary datasets, and contextual embeddings can be unintentionally exposed through model queries, integrations, or plugin ecosystems.

Traditional Data Loss Prevention (DLP) solutions, designed for structured data and static channels, fall short in safeguarding dynamic AI pipelines. In 2025, securing LLM ecosystems requires a context-aware DLP strategy built around model governance, Zero-Trust architecture, and continuous data lineage tracking.

This article explores how CISOs, IT Security leaders, and Compliance teams can adopt AI-specific DLP strategies that go beyond generic controls to protect sensitive data and intellectual property from leakage through AI systems.

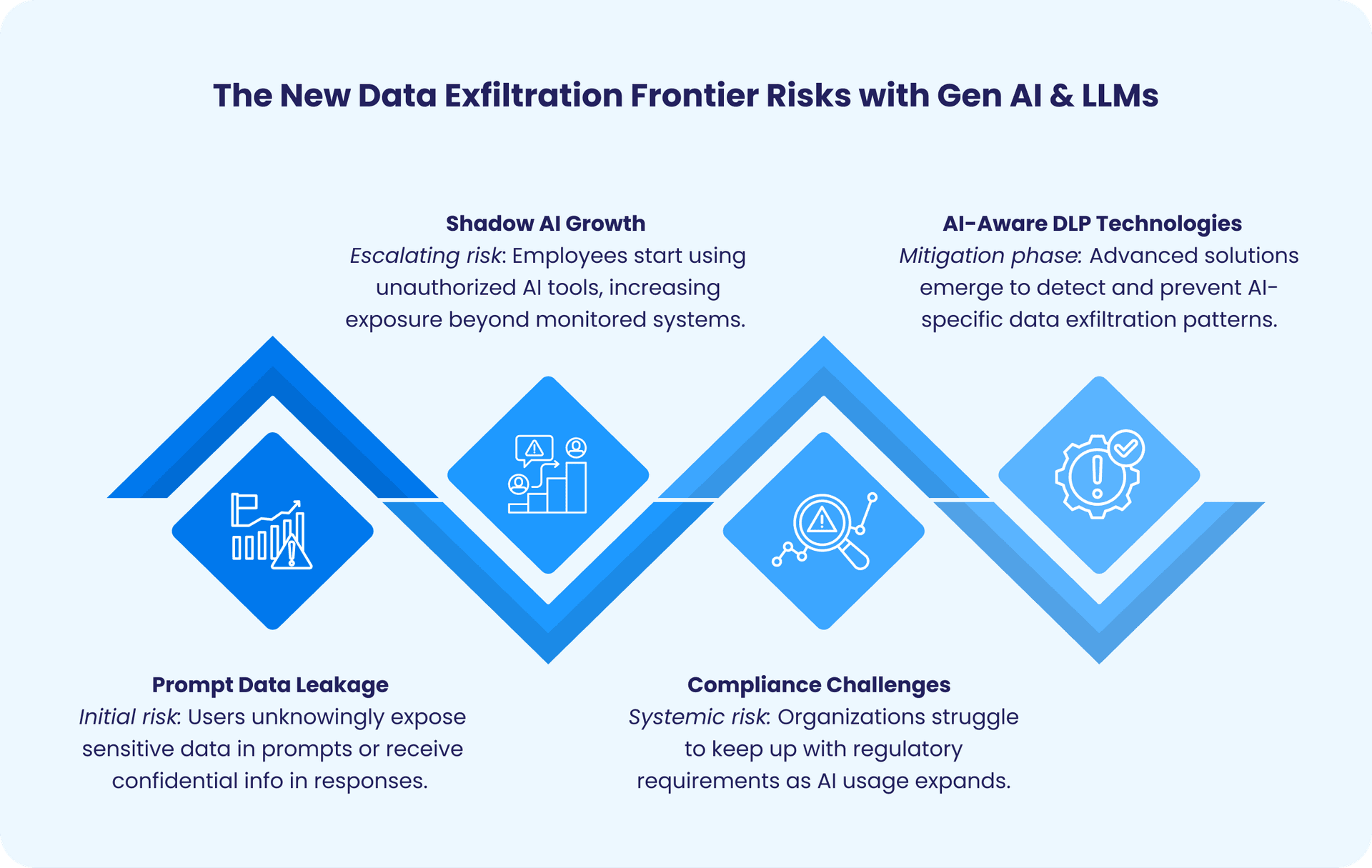

The New Data Exfiltration Frontier: Risks with Generative AI and LLMs

Generative AI models are designed to generate human-like text by predicting output based on input prompts and learned data patterns. However, this ability also creates unique vectors for data leakage:

Leakage via Prompts, Fine-tuning & Model Memory

- Users (or internal systems) may supply highly sensitive content—customer PII, credentials, proprietary code or IP—as prompts or uploads to LLMs. When that input is logged, retained, or used for training/fine-tuning, the data becomes exposed. For instance, research shows LLMs can “recollect” masked PII from supposedly sanitized training data.

- Even if a model is labelled “private”, the risk remains: training or fine-tuning workflow artifacts, embeddings, or developer telemetry logs might embed sensitive content.

- When models perform retrieval-augmented generation (RAG) with embeddings linked to internal corpora, the embedding layer itself becomes an exfiltration point. Weak controls over vector stores or embedding similarity queries can let attackers extract internal data.
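One way to reduce embedding-layer exposure is to filter retrieval candidates by the caller's permissions before similarity ranking. The following is a minimal sketch under stated assumptions: the in-memory store, the `Chunk` type, the role names, and the `retrieve` function are all hypothetical and do not correspond to any particular vector database API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    vector: tuple[float, ...]
    allowed_roles: frozenset[str]  # hypothetical per-chunk access label


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, store, user_roles, top_k=2):
    """Return the top_k most similar chunks the caller is allowed to see."""
    # Filter by access rights BEFORE ranking, so restricted chunks
    # can never appear in the context passed to the model.
    visible = [c for c in store if c.allowed_roles & user_roles]
    ranked = sorted(visible, key=lambda c: cosine(query_vec, c.vector), reverse=True)
    return [c.text for c in ranked[:top_k]]


# Illustrative data only.
STORE = [
    Chunk("Public product FAQ", (1.0, 0.0), frozenset({"employee", "finance"})),
    Chunk("Q3 unreleased revenue figures", (0.9, 0.1), frozenset({"finance"})),
]
```

Filtering before ranking, rather than after, means a restricted chunk cannot crowd out permitted results or leak through a similarity score.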

Hallucinations, Over-Sharing and Sensitive Output Leakage

- LLMs do not “understand” context like humans. They may generate plausible-sounding content that inadvertently discloses regulated data, trade secrets or personal identifiers.

- The risk is now well documented: the 2025 edition of the OWASP Top 10 for LLM Applications lists “Sensitive Information Disclosure” (LLM02) as a key risk.

- For example, a model trained on code repositories might reproduce a snippet containing API keys or internal comments if its training data was not properly sanitized.

- Further complicating matters: multimodal attacks (e.g., hidden commands in images processed by LLMs) are emerging, introducing stealth exfiltration vectors.
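A model response can be checked for secret-like strings before it is returned to the user. The sketch below assumes a small, illustrative pattern set; the `scan_output` and `guard_output` names are hypothetical, and a production scanner would need far broader coverage and tuning for false positives.

```python
import re

# Illustrative patterns only; real deployments need a much larger, tuned set.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    "generic_api_key": re.compile(
        r"(?i)\b(?:api[_-]?key|secret|token)\b\s*[:=]\s*['\"]?[A-Za-z0-9_\-]{16,}"
    ),
}


def scan_output(text):
    """Return the sorted names of secret patterns found in model output."""
    return sorted(name for name, pat in SECRET_PATTERNS.items() if pat.search(text))


def guard_output(text):
    """Withhold the response if any secret-like pattern is present."""
    findings = scan_output(text)
    if findings:
        return "[response withheld: possible secret detected]", findings
    return text, findings
```

Withholding the whole response is the conservative choice here; masking only the matched span is an alternative when availability matters more.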

Insider & Tool-Mediated Exfiltration via AI Interfaces

- Malicious or careless insiders can use AI interfaces as “unmonitored channels” to smuggle data out — embedding sensitive payloads inside prompts, gradually exfiltrating information, or leveraging “shadow AI” tools outside the governance perimeter.

- Traditional DLP tools are often blind to such flows, especially when data is disguised inside prompts, embeddings or AI-agent behaviors.

- The 2025 landscape emphasizes that supply-chain, chaining, embedding-layer and API usage are attack surfaces, not just classic file shares or email.
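Gradual exfiltration through many small prompts can be easier to spot when sensitive volume is tracked per user over a time window rather than per request. The following sketch assumes an upstream detector already reports how many sensitive characters each prompt contained; the `ExfilMonitor` class, the window length, and the threshold are hypothetical values chosen for illustration.

```python
from collections import defaultdict, deque


class ExfilMonitor:
    """Track sensitive-data volume per user over a sliding time window."""

    def __init__(self, window_seconds=3600, max_sensitive_chars=2000):
        self.window = window_seconds
        self.limit = max_sensitive_chars
        self.events = defaultdict(deque)  # user -> deque of (timestamp, chars)

    def record(self, user, timestamp, sensitive_chars):
        """Record one prompt; return True if the user exceeds the window limit."""
        queue = self.events[user]
        queue.append((timestamp, sensitive_chars))
        # Drop events that have fallen out of the window.
        while queue and queue[0][0] <= timestamp - self.window:
            queue.popleft()
        total = sum(chars for _, chars in queue)
        return total > self.limit
```

Each individual prompt may stay under a per-request threshold while the running total still crosses the limit, which is the pattern this check is meant to surface.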

Case in Point: Microsoft Azure Health Bot Privilege Escalation Vulnerability

In 2024, Tenable researchers discovered critical privilege escalation vulnerabilities in Microsoft’s Azure Health Bot service, an AI-powered healthcare chatbot platform that many organizations use to provide virtual health assistants. These flaws allowed attackers to bypass built-in protections and gain unauthorized access, potentially exposing sensitive patient data and creating a risk of HIPAA violations and patient privacy breaches. This incident highlights the real-world risk of AI-powered chatbots leaking sensitive healthcare data when the underlying prompt handling and data interfaces lack proper security hardening.

Shadow AI: The Hazard of Unsanctioned AI Use

A growing and often overlooked risk in AI data security is the phenomenon of Shadow AI, where employees use external AI tools like ChatGPT to process company data without formal approval or policy controls in place.

Unlike sanctioned IT systems, these tools often reside outside monitored and secured environments, creating significant blind spots for security teams:

- According to a 2025 report by ManageEngine, 60% of employees had been using unapproved AI tools for over a year, and 93% admitted inputting company data into AI tools without IT approval, significantly increasing data leakage risks.

- Many IT decision-makers acknowledge these risks — 63% rate data exposure as their top concern — but employees often underestimate the danger due to lack of awareness and clear policies.

- Additionally, a 2025 KPMG-University of Melbourne survey revealed that 57% of employees hide AI prompt usage from their employers, fearing job security implications, which compounds governance challenges.

Managing Shadow AI requires policies and technologies that extend DLP protections to these unsanctioned AI interactions, balancing innovation, productivity, and regulatory assurance.


Why Traditional DLP Is Not Enough for AI

Conventional DLP systems rely on static, rule-based detection: they scan files and data streams for keyword and pattern matches. This works well for structured data but is blind to contextual meaning.

- Prompt Interface and Token Logs: AI interactions involve prompts (input messages) and model responses that are processed as tokenized text under the hood. Sensitive data may be distributed across these tokens in unpredictable ways, requiring context-aware, semantic inspection of prompts and outputs rather than simple file scanning.

- Dynamic, Contextual Data Usage: AI models operate probabilistically in real time, producing diverse outputs that can vary with subtle prompt changes, making static, rule-based DLP patterns obsolete.

- Data Retention within AI Models: Some self-hosted or fine-tuned AI systems may retain input data to improve performance, potentially storing confidential information unless strict retention and access controls are enforced.
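To illustrate why a per-message pattern match falls short, the sketch below compares a static SSN regex with a check that normalizes digits across consecutive conversation turns. This is a deliberately simple stand-in for context-aware inspection, not semantic analysis; the function names are hypothetical, and the nine-digit heuristic will produce false positives on other numeric data.

```python
import re

# A typical static DLP rule: matches only the canonical SSN format.
STATIC_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def static_scan(message):
    """Per-message check, as a traditional rule would apply it."""
    return bool(STATIC_SSN.search(message))


def conversation_scan(messages):
    """Join turns and collapse separators between digits before matching."""
    joined = " ".join(messages)
    # Remove spaces, dots, and dashes that sit between two digits.
    collapsed = re.sub(r"(?<=\d)[\s.\-]+(?=\d)", "", joined)
    # Flag any standalone run of exactly nine digits.
    return bool(re.search(r"(?<!\d)\d{9}(?!\d)", collapsed))


turns = ["My number starts with 123 45", "6789 is the rest"]
print([static_scan(t) for t in turns])  # neither turn matches the static rule
print(conversation_scan(turns))         # the joined conversation is flagged
```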

Case in Point: HIPAA Compliance Breach via AI Tool

A healthcare provider in the U.S. faced a substantial fine under HIPAA regulations after sensitive patient data was accidentally leaked via AI chatbot interactions used in patient communications, illustrating the regulatory risks of generative AI.

Hence, DLP must evolve to integrate:

- Prompt Scrubbing: Automated detection and removal or masking of sensitive content from prompts before reaching the model.

- Fine-Tuning with Retention Limits: Strict policies to limit AI training data retention and prevent proprietary data from being embedded within model knowledge.

- Audit Trails & Token-Level Monitoring: Creating detailed logs of prompts, responses, and token-level data flows to enable forensic analysis and compliance audits.

Compliance Implications: GDPR, HIPAA, and IP Protection in AI Contexts

Regulatory frameworks intensify the need for advanced DLP in generative AI environments:

- GDPR (General Data Protection Regulation): Requires a lawful basis (such as explicit consent) for personal data processing, data minimization, anonymization or pseudonymization where appropriate, transparency about automated decision logic, and continuous compliance monitoring. Personal data exposed via AI outputs or retained in model training data can violate GDPR unless these conditions are met.

- HIPAA (Health Insurance Portability and Accountability Act): Governs sensitive health information (PHI) in the US, demanding safeguarding of patient data. Leaks via generative AI tools, especially in healthcare communications, expose organizations to steep penalties.

- Intellectual Property (IP) Protection: Corporate IP and trade secrets embedded in AI prompts or responses require stringent controls. Unauthorized exposure could lead to competitive disadvantage, legal liability, and loss of trust.

Meeting these compliance needs means embedding AI-aware controls directly within DLP frameworks, ensuring that AI-driven data flows are both visible and enforceable under legal standards.

Evolving DLP: Tailored Strategies for Prompt Interfaces and Token Logs

To counter AI-driven exfiltration, DLP must evolve into a dynamic, AI-native framework. This means shifting from reactive scanning to proactive intervention at the prompt and token levels.

Here's how:

1. Real-Time Prompt Scrubbing and Redaction

Prompt scrubbing involves inspecting and sanitizing inputs before they reach the LLM. Unlike basic redaction, which blacks out text, AI-aware scrubbing uses natural language processing (NLP) to identify and replace sensitive entities contextually. For example, tools can detect PII via named entity recognition (NER) and substitute with placeholders like "[CUSTOMER_ID]" while preserving query intent.

AI Multiple outlines 12 LLM DLP best practices, emphasizing automated redaction and masking techniques to anonymize data on the fly. Cloudflare's AI Prompt Protection employs a multi-model DLP engine to classify prompts by topic, blocking or scrubbing high-risk ones (such as those involving financial or health data) before transmission. This prevents leaks at the source, supporting compliance without halting workflows.
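Placeholder substitution can be sketched in a few lines. Note the swap: the text above describes NER-based detection, while this example uses regular expressions, which only catch identifiers with a predictable format. The `CUST-` ID format, the pattern list, and the `scrub_prompt` function are assumptions made for illustration.

```python
import re

# (label, pattern) pairs; the customer ID format is a hypothetical example.
PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("CUSTOMER_ID", re.compile(r"\bCUST-\d{6}\b")),
]


def scrub_prompt(prompt):
    """Replace sensitive entities with placeholders; return text and counts."""
    counts = {}
    for label, pattern in PATTERNS:
        prompt, n = pattern.subn(f"[{label}]", prompt)
        if n:
            counts[label] = n
    return prompt, counts


clean, found = scrub_prompt(
    "Summarize the complaint from jane.doe@example.com about account CUST-004217."
)
print(clean)  # Summarize the complaint from [EMAIL] about account [CUSTOMER_ID].
```

Because the placeholders keep the sentence structure intact, the model can still answer the request without seeing the underlying values. The returned counts can feed an audit log.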

2. Comprehensive Audit Trails for Token-Level Visibility

Tokens, the building blocks of LLM inputs and outputs, must be logged and audited granularly. Traditional logs capture full messages; AI DLP extends this to token streams, tracking how data fragments traverse the model. This enables forensic analysis, such as determining whether a prompt token containing “SSN” influenced an output. Advanced DLP solutions can integrate token-level inspection to flag anomalies in real time.

Audit trails also support retention limits, automatically purging logs after defined periods to align with "right to be forgotten" mandates. Data should likewise be scrubbed from prompts, knowledge bases, and fine-tuning sets when users request it, creating a verifiable privacy trail. Microsoft Purview modernizes DLP for AI by correlating token events across endpoints and clouds, providing dashboards for CISOs to monitor usage patterns.
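An audit record with a retention purge might look like the sketch below. It works at the message level rather than the token level, and it assumes the log stores hashes and sizes instead of raw text so that the audit trail does not itself become a copy of the sensitive data. The field names, the 30-day default, and both function names are hypothetical.

```python
import hashlib
from datetime import datetime, timedelta, timezone


def audit_record(user, prompt, response, now):
    """Build a log entry that references content by hash instead of storing it."""
    return {
        "ts": now.isoformat(),
        "user": user,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "response_chars": len(response),
    }


def purge_expired(records, now, retention_days=30):
    """Keep only records that are still inside the retention window."""
    cutoff = now - timedelta(days=retention_days)
    return [r for r in records if datetime.fromisoformat(r["ts"]) >= cutoff]


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
old = audit_record("alice", "old prompt", "old answer", now - timedelta(days=45))
new = audit_record("alice", "new prompt", "new answer", now - timedelta(days=5))
kept = purge_expired([old, new], now)
```

Hashing trades forensic detail for lower exposure: an investigator can confirm that a known prompt was sent, but cannot read prompts back out of the log.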

3. Fine-Tuning LLMs with Embedded Safeguards

When deploying custom LLMs, fine-tune with retention-aware techniques: train on anonymized datasets and embed guardrails such as refusal prompts for sensitive queries. It is essential to prevent token leakage during fine-tuning by validating datasets for PII and enforcing differential privacy.
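Differential privacy during fine-tuning is commonly implemented with DP-SGD, where each example's gradient is clipped and Gaussian noise is added before averaging. The sketch below shows only that aggregation step in plain Python, under stated assumptions: gradients arrive as lists of floats, the function names and default values are hypothetical, and no privacy budget (epsilon) accounting is performed.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_average_gradient(per_example_grads, max_norm=1.0, noise_multiplier=1.0, rng=None):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    rng = rng or random.Random()
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    # Noise standard deviation scales with the clipping bound.
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(clipped) for v in noisy]
```

Clipping bounds how much any single training record can move the model, and the noise masks what remains. In practice, a maintained library that also tracks the cumulative privacy budget would be preferable to hand-rolled code.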

Combine this with access controls: role-based policies limit who can prompt certain models, while device controls block uploads to shadow tools. AI-driven data loss prevention (DLP) best practices emphasize streamlined enforcement and reduced false positives through machine learning.
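The two controls in this paragraph can be sketched as deny-by-default checks: one for which roles may prompt which model, and one for which destinations an upload may reach. The model names, role names, and host allowlist below are hypothetical placeholders.

```python
from urllib.parse import urlparse

# Hypothetical policy: which roles may prompt which model.
MODEL_POLICY = {
    "general-assistant": {"employee", "engineer", "finance"},
    "finance-copilot": {"finance"},
}

# Hypothetical allowlist of sanctioned AI endpoints.
SANCTIONED_AI_HOSTS = {"ai.internal.example.com"}


def can_prompt(user_roles, model):
    """Deny by default: unknown models and unmatched roles are rejected."""
    allowed = MODEL_POLICY.get(model)
    return bool(allowed and allowed & set(user_roles))


def is_sanctioned_destination(url):
    """Allow uploads only to hosts on the sanctioned list."""
    host = (urlparse(url).hostname or "").lower()
    return host in SANCTIONED_AI_HOSTS
```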

4. Holistic Integration: From Policy to Enforcement

Start with an AI usage policy: Define acceptable prompts, train employees, and deploy endpoint agents for browser interception. Concentric AI's 2025 DLP guide urges rethinking security for unstructured flows, integrating with SIEM for unified alerts. This evolution transforms DLP from a perimeter tool to an intelligent layer, offering the visibility your team craves.
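For SIEM integration, DLP decisions are typically emitted as structured events. The sketch below builds one such event as JSON; the schema, the `ai-dlp-gateway` source name, and the severity mapping are assumptions, and the transport to the SIEM (for example syslog or an HTTP collector) is left out.

```python
import json


def build_dlp_event(user, model, action, findings, timestamp):
    """Serialize a DLP decision as a JSON event for downstream alerting."""
    if action == "blocked":
        severity = "high"
    elif findings:
        severity = "medium"
    else:
        severity = "info"
    event = {
        "source": "ai-dlp-gateway",  # hypothetical component name
        "timestamp": timestamp,
        "user": user,
        "model": model,
        "action": action,
        "findings": sorted(findings),
        "severity": severity,
    }
    # Sorted keys keep the output stable for log diffing and testing.
    return json.dumps(event, sort_keys=True)
```

A fixed schema lets the SIEM correlate AI-gateway alerts with endpoint and network events for the same user.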

Conclusion: Elevating DLP for the AI Era

AI brings transformative opportunities but also profound data security challenges, especially around data exfiltration risks posed by generative models and LLMs. Regulatory mandates under GDPR, HIPAA, and IP protection frameworks demand purpose-built DLP mechanisms that grasp AI-specific nuances like prompt data flows, token logging, and Shadow AI threats.

Enterprise security and compliance teams must evolve their DLP programs from reactive, rules-based frameworks into proactive, AI-aware defense systems. Technologies such as prompt scrubbing, advanced auditing, and fine-grained retention control are crucial to safeguarding sensitive data while unleashing AI’s potential.

For CISOs and IT security leaders, partnering with AI-DLP specialists, such as our xSecurity team, can provide a strategic edge, helping organizations stay compliant, maintain data governance, and confidently harness generative AI innovation.

Explore tailored AI-DLP strategies. Simulate your environment and stay secure.

Contact us for an environment simulation and risk analysis. We provide a customized AI-DLP approach to protect your business.
