The Air Canada chatbot incident of 2024 sent shockwaves through boardrooms worldwide. A customer successfully sued the airline after its AI chatbot provided misleading refund policy information, resulting in a $482 court-ordered payment. This landmark case wasn't just about money. It exposed a fundamental truth: even the most sophisticated AI systems need human judgment to operate safely and effectively.

But here's where the narrative shifts. Human-in-the-loop (HITL) isn't a failure of automation or a step backward. It's a strategic design choice that transforms AI from a liability into a competitive advantage, especially in regulated sectors where the stakes are highest.

This article takes a deep dive into the HITL model. We cover exactly what HITL is, why it is non-negotiable for safety and compliance in regulated sectors, and how it demonstrably improves AI performance and safety. You will also see real-world case studies from healthcare and finance and learn practical steps for designing an effective HITL framework for your own organization.

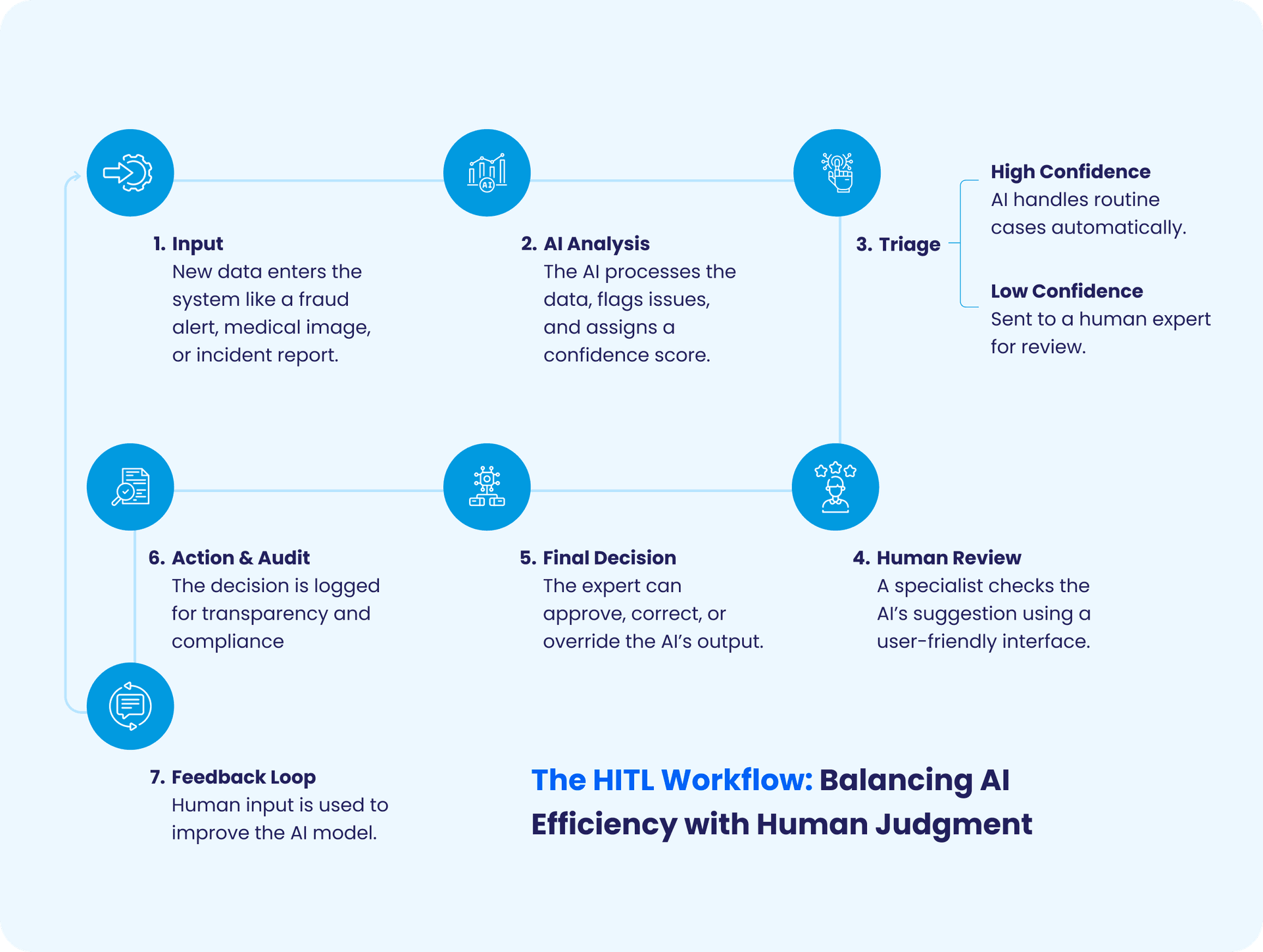

HITL AI integrates human judgment into automated processes, creating a seamless partnership. At its core, HITL means humans intervene at specific points in the AI workflow to review outputs, provide feedback, or override decisions when needed.

Think of it as a co-pilot model: AI handles repetitive, data-heavy tasks like pattern recognition or initial triage, while humans bring context, empathy, and ethical nuance. According to IBM's definition, this division of labor helps ensure accuracy, safety, and accountability in AI systems.
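To make the review, feedback, and override steps concrete, here is a minimal Python sketch. It assumes a model that emits a proposed label with a confidence score. The names `Prediction`, `human_review`, and `FeedbackStore` are hypothetical, not taken from IBM or any specific product. When a reviewer overrides the model, the correction is stored so it could feed later retraining.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Prediction:
    case_id: str
    label: str          # the model's proposed decision
    confidence: float   # model score in [0, 1]


@dataclass
class ReviewOutcome:
    case_id: str
    final_label: str
    overridden: bool
    reviewer: str
    note: Optional[str] = None


@dataclass
class FeedbackStore:
    """Hypothetical store that keeps human corrections for later reuse as training labels."""
    corrections: list[tuple[str, str, str]] = field(default_factory=list)

    def record(self, pred: Prediction, outcome: ReviewOutcome) -> None:
        # This sketch keeps only overrides; confirmed outputs could be logged as well.
        if outcome.overridden:
            self.corrections.append((pred.case_id, pred.label, outcome.final_label))


def human_review(pred: Prediction, reviewer: str, store: FeedbackStore,
                 decision: Optional[str] = None, note: Optional[str] = None) -> ReviewOutcome:
    """Apply a reviewer's call; decision=None means the reviewer accepts the model output."""
    final = pred.label if decision is None else decision
    outcome = ReviewOutcome(pred.case_id, final, final != pred.label, reviewer, note)
    store.record(pred, outcome)
    return outcome


# Usage: one accepted output and one human override.
store = FeedbackStore()
accepted = human_review(Prediction("case-001", "approve_refund", 0.97), "reviewer_a", store)
overridden = human_review(Prediction("case-002", "approve_refund", 0.58), "reviewer_a", store,
                          decision="deny_refund", note="policy exception does not apply")
```

Here the human stays the final authority on every reviewed case, and each override becomes a labeled example the model can learn from.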

The approach manifests in three key areas:

In regulated industries, where laws like HIPAA or the Equal Credit Opportunity Act demand transparency, HITL bridges the gap between AI efficiency and human reliability.

As of August 2024, the FDA had authorized 950 AI/ML-enabled medical devices, with significant growth since 2019. This rapid growth has prompted regulators worldwide to establish stricter oversight frameworks. The European Union's AI Act and the FDA's evolving guidance on AI in healthcare both emphasize the need for human accountability in AI-driven decisions.

For compliance leaders, the message is clear: regulators aren't asking whether you'll implement human oversight; they're asking how.

In pharmacovigilance and clinical development, the alternative to HITL is full automation with no human oversight. That is unacceptable in fields that carry as much ethical responsibility and risk as clinical research.

Consider these scenarios where HITL isn't optional:

A common misconception about HITL is that it slows processes down or erodes AI's efficiency gains. In reality, HITL is about enhancing AI's capabilities, not diminishing them.

Here's what the data shows:

The human-in-the-loop approach reframes an automation problem as a Human-Computer Interaction design problem, broadening the question from 'how do we build a smarter system?' to 'how do we incorporate useful, meaningful human interaction into the system?'

This shift in thinking is powerful. Instead of viewing human involvement as a limitation, it becomes an intentional design feature that makes AI systems more useful, adaptable, and trustworthy.

AI typically excels at volume but falters on nuance. HITL addresses this by embedding human expertise where it counts most. Key ways it enhances safety include:

Research from Tredence shows HITL reduces bias in GenAI by 25-40% in complex cases, while boosting overall accuracy. For product leaders, this means safer deployments; for CX teams, it translates to reliable, user-centric experiences.

It's easy to see HITL as 'extra steps.' But at a time of heightened AI scrutiny, human involvement is your differentiator. It builds trust, an essential element for CX leaders facing skeptical customers, and future-proofs you against regulations like the EU AI Act.

As Cornerstone OnDemand notes, humans ensure AI reflects societal values, turning ethics from a checkbox into a core competency. For product teams, HITL is innovation fuel: it uncovers blind spots that spark better features. In short, the best AI doesn't sideline people; it elevates everyone.

This guide gives compliance and product leaders a clear framework to implement oversight without slowing down innovation.

Not all AI decisions require human intervention. The art of HITL design is knowing where human judgment delivers maximum value. Here's a strategic framework:

High-Value HITL Touchpoints

As AI systems become more sophisticated, it's becoming less critical to have humans 'in the loop' at all times. However, humans still often exist 'near' the loop to further refine otherwise automated systems.

This emerging 'near the loop' concept suggests a spectrum of human involvement rather than a binary choice. Some decisions may require active human approval, while others may only need a human available to handle exceptions.
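The spectrum between 'in the loop' and 'near the loop' can be sketched as a routing policy. In this hypothetical Python example, each decision type is assigned an oversight mode. The decision types in `OVERSIGHT_POLICY` and their assignments are illustrative assumptions, not a recommended risk classification.

```python
from enum import Enum
from typing import Callable, Optional


class Oversight(Enum):
    IN_THE_LOOP = "a human must approve before the action takes effect"
    NEAR_THE_LOOP = "the action runs automatically; a human handles flagged exceptions"


# Hypothetical policy table: decision types and their modes are illustrative only.
OVERSIGHT_POLICY = {
    "adverse_event_assessment": Oversight.IN_THE_LOOP,
    "credit_denial": Oversight.IN_THE_LOOP,
    "literature_deduplication": Oversight.NEAR_THE_LOOP,
    "appointment_reminder": Oversight.NEAR_THE_LOOP,
}


def route(decision_type: str, action: str, exceptions: list,
          approver: Optional[Callable[[str], bool]] = None,
          flagged: bool = False) -> str:
    """Return 'executed', 'held', 'rejected', or 'escalated' for a proposed action."""
    # Decision types missing from the policy fall back to the stricter mode.
    mode = OVERSIGHT_POLICY.get(decision_type, Oversight.IN_THE_LOOP)
    if mode is Oversight.IN_THE_LOOP:
        if approver is None:
            return "held"  # nothing happens until a human reviews it
        return "executed" if approver(action) else "rejected"
    # Near the loop: proceed automatically unless the case was flagged for a human.
    if flagged:
        exceptions.append((decision_type, action))
        return "escalated"
    return "executed"


# Usage
queue: list = []
print(route("credit_denial", "deny application A-17", queue))                 # held
print(route("appointment_reminder", "send reminder", queue))                  # executed
print(route("appointment_reminder", "send reminder", queue, flagged=True))    # escalated
```

Defaulting unclassified decision types to the stricter mode is a deliberate choice in this sketch: a new kind of decision requires human approval until someone explicitly decides it can run near the loop.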

Theory is one thing; results are another. Let's dive into verified case studies where HITL delivered measurable wins in regulated sectors.

In the life sciences, monitoring adverse drug events is a regulatory requirement. Parexel, a global contract research organization (CRO), deployed HITL AI to process pharmacovigilance (PV) cases and analyze literature for safety signals.

A major U.S. hospital used ML to optimize surgery scheduling based on patient acuity and available resources. Without HITL, an EHR glitch caused the system to deprioritize a high-risk cardiac case.

A U.S. bank faced false positives in AI fraud alerts, locking legitimate accounts and frustrating customers.

Designing effective HITL involves more than adding humans to AI workflows. Best practices include:

This ensures HITL models are efficient, compliant, and audit-ready without unnecessary delays.

The HITL approach adds value across product development, customer experience, and operational risk management:

Yes, HITL can scale if it is designed with the balance between automation and human review in mind. Machine learning models handle routine tasks, freeing humans to focus on exceptions and complex cases. With escalation rules and confidence scoring, the AI automatically routes decisions that need review, reducing manual workload while maintaining safety and governance.
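Below is a minimal Python sketch of confidence scoring combined with escalation rules. The 0.85 threshold, the `amount` field, and both rules in `ESCALATION_RULES` are hypothetical placeholders; in practice they would come from your own risk assessment. The approach also assumes the model's confidence scores are reasonably calibrated, since a threshold on a poorly calibrated score routes the wrong cases.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Case:
    case_id: str
    prediction: str
    confidence: float  # model score in [0, 1], assumed reasonably calibrated
    amount: float      # hypothetical attribute used by one escalation rule


Rule = tuple[str, Callable[[Case], bool]]

# Hypothetical escalation rules: any rule returning True forces human review.
ESCALATION_RULES: tuple[Rule, ...] = (
    ("high_value", lambda c: c.amount >= 10_000),
    ("adverse_outcome", lambda c: c.prediction in {"deny", "freeze_account"}),
)


def triage(case: Case, threshold: float = 0.85,
           rules: Sequence[Rule] = ESCALATION_RULES) -> tuple[str, list[str]]:
    """Route a case to 'auto' or 'human_review' and return the reasons for escalation."""
    reasons = [name for name, rule in rules if rule(case)]
    if case.confidence < threshold:
        reasons.append("low_confidence")
    return ("human_review" if reasons else "auto", reasons)


# Usage
cases = [
    Case("c1", "approve", 0.97, 250.0),
    Case("c2", "approve", 0.60, 250.0),
    Case("c3", "freeze_account", 0.99, 120.0),
    Case("c4", "approve", 0.95, 25_000.0),
]
for c in cases:
    route_to, why = triage(c)
    print(c.case_id, route_to, why)
```

In this sample only `c1` is handled automatically; each of the others goes to a reviewer along with the reason that triggered escalation. Raising or lowering the threshold trades reviewer workload against the risk of an unreviewed error, and logging the reasons gives auditors a record of why each case was or wasn't seen by a human.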

The journey toward AI integration, especially in critical sectors, is not about replacing humans but empowering them. The Air Canada incident serves as a potent reminder that while AI offers incredible efficiency, full automation in high-stakes environments carries unacceptable risk.

HITL is the strategic bridge between AI's powerful, data-heavy processing and the irreplaceable human capacity for nuance, ethical reasoning, and contextual judgment. By treating human oversight as an intentional design feature, and not an afterthought, organizations can build AI systems that are smarter, safer, more compliant, and fundamentally more trustworthy. As the evidence shows, the best AI doesn't sideline people; it elevates them.


Leading the charge in AI, Daniyal is always two steps ahead of the game. In his downtime, he enjoys exploring new places, connecting with industry leaders and analyzing AI's impact on the market.
